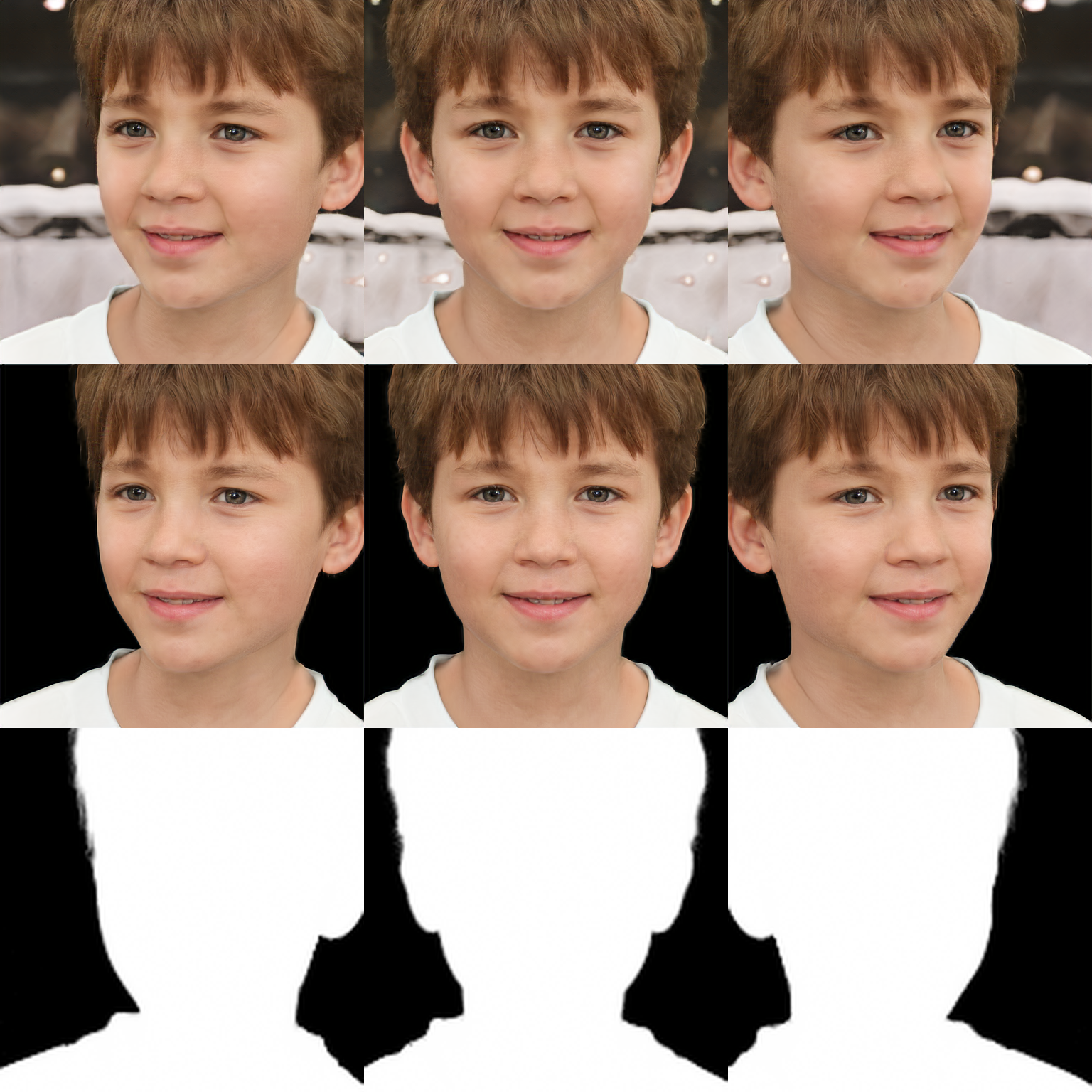

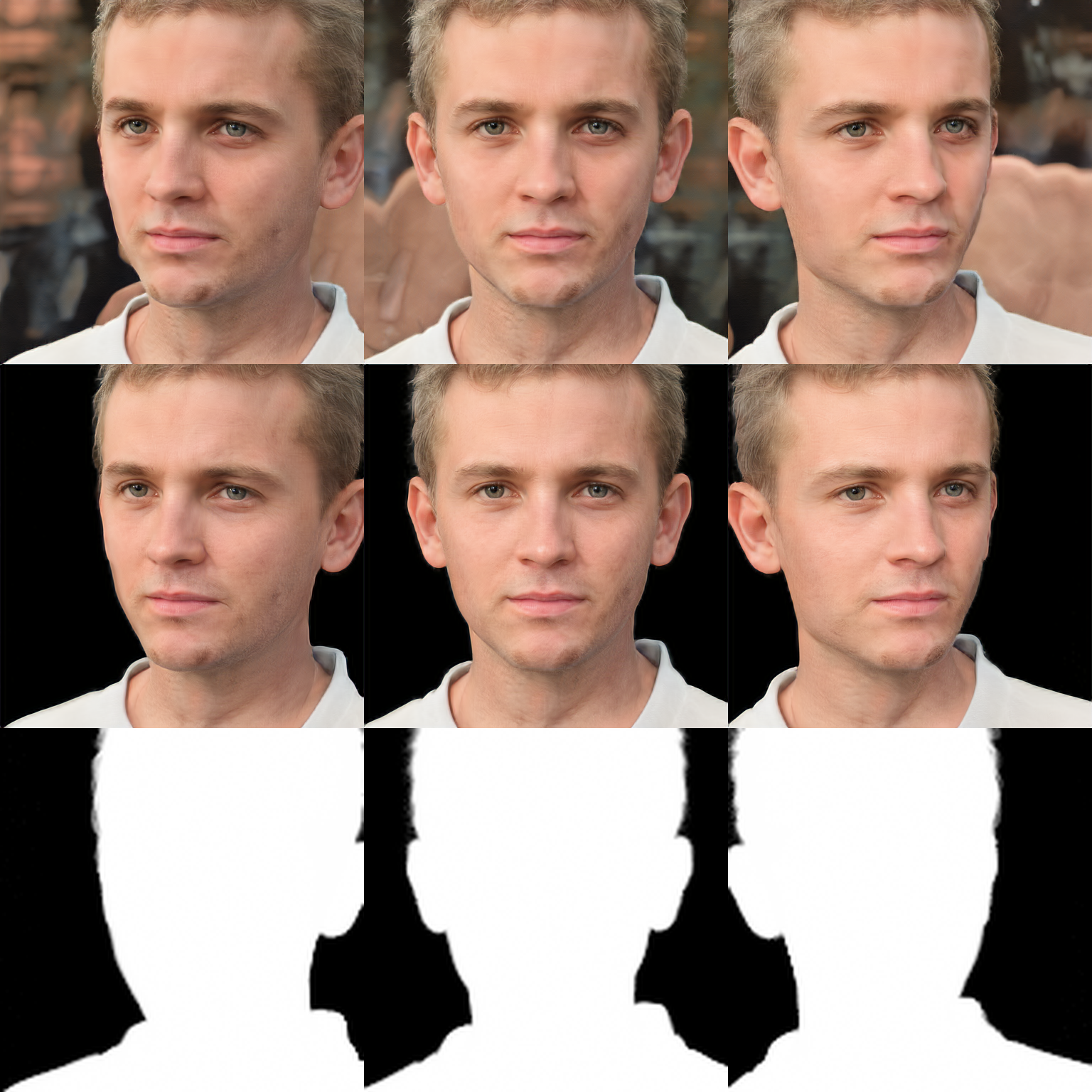

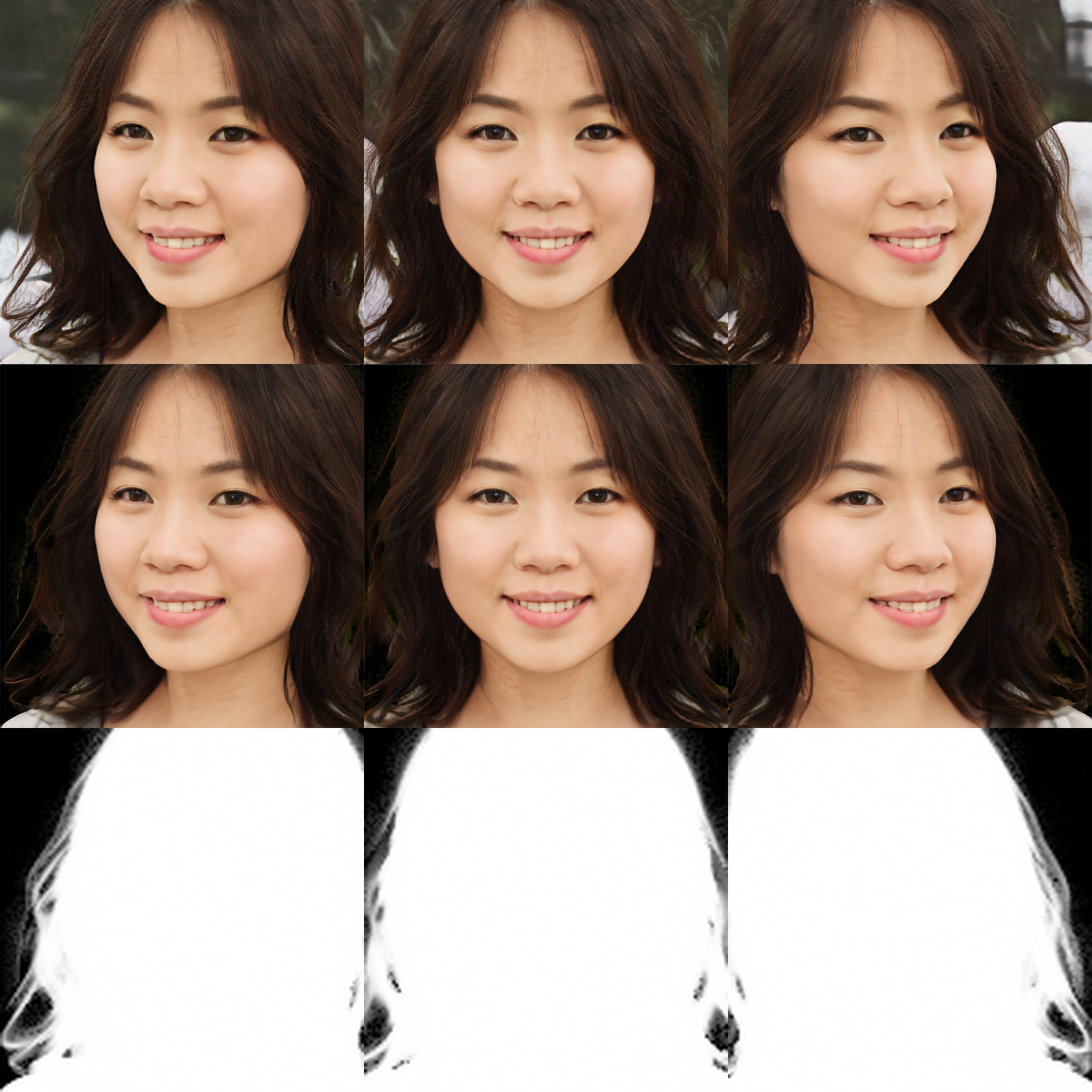

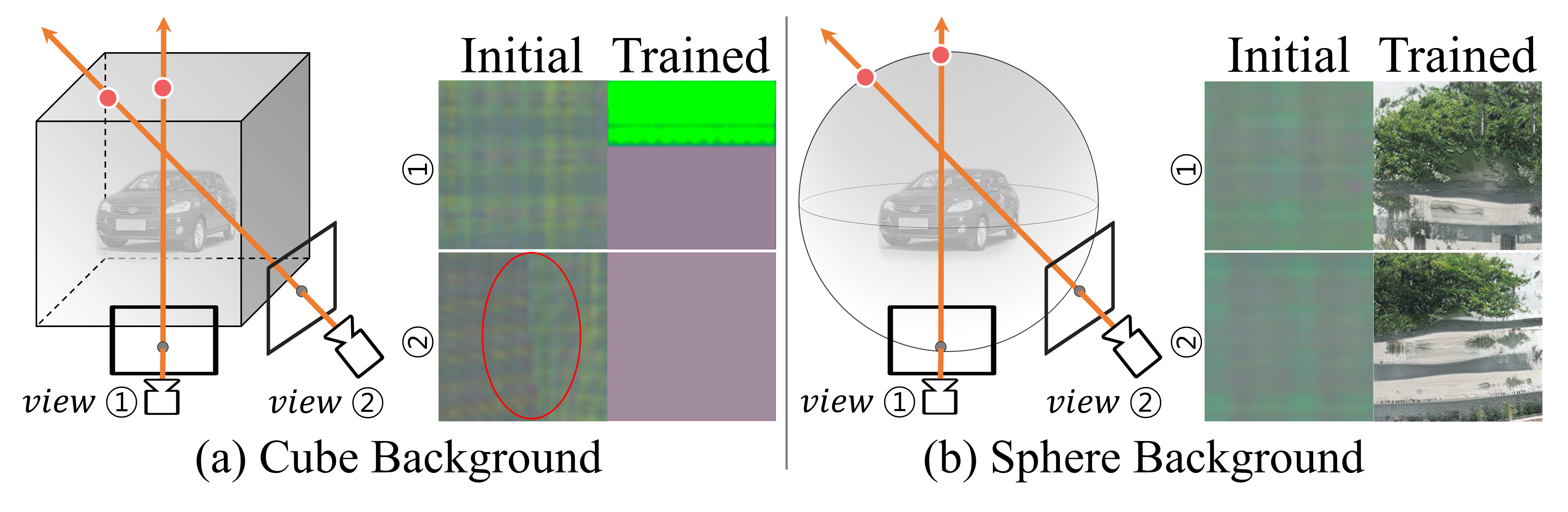

Motivation

BallGAN is inspired by an approach popular in the graphics community for video games and movies: representing salient objects with detailed 3D models and approximating peripheral scenery with simple surfaces. This drastically reduces scene complexity by devoting fewer resources to the background. Because the human visual system focuses more on salient objects than on the background, this technique does not degrade the user’s experience.